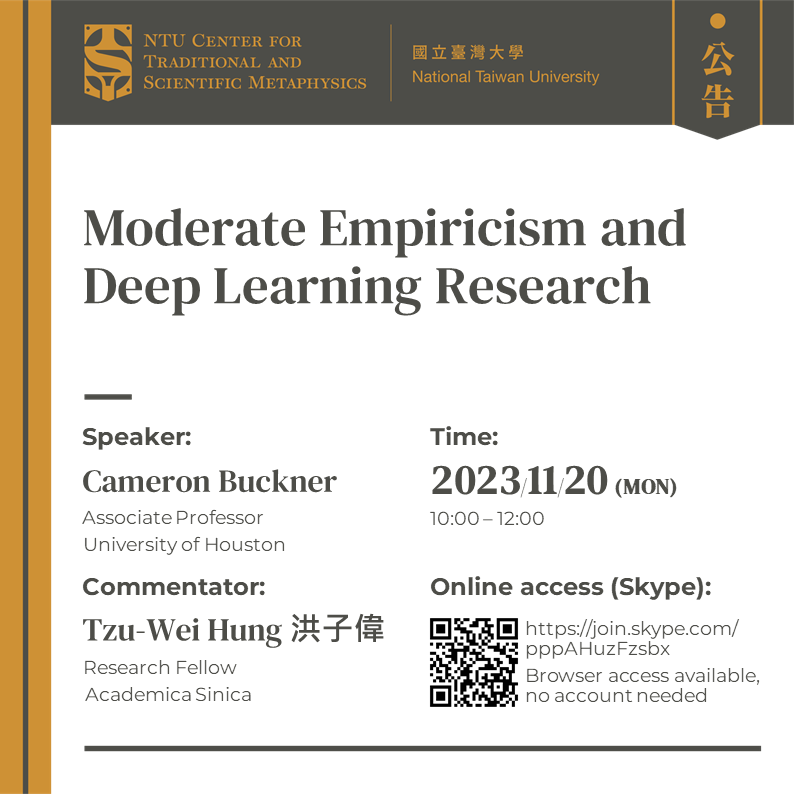

Moderate Empiricism and Deep Learning Research

Cameron Buckner (with commentary by Tzu-Wei Hung 洪子偉)

Skype link: https://join.skype.com/pppAHuzFzsbx

Abstract: In recent years, deep learning systems like AlphaGo, AlphaFold, DALL-E, and ChatGPT have blown through the expected upper limits of artificial neural network research, which attempts to create artificial intelligence in computational systems by replicating aspects of the brain’s structure. However, these deep learning systems are of unprecedented scale and complexity, both in the size of their training sets and in the number of their parameters. This makes it very difficult to understand how they perform as well as they do. In this talk, I outline a framework for thinking about the foundational philosophical questions raised by deep learning as an approach to artificial intelligence. Specifically, my framework links deep learning’s research agenda to a strain of thought in classic empiricist philosophy of mind. Both empiricist philosophy of mind and deep learning are committed to a Domain-General Modular Architecture (a “new empiricist DoGMA”) for cognition in network-based systems. In this version of moderate empiricism, active, general-purpose faculties (such as perception, memory, imagination, attention, and empathy) play a crucial role in allowing us to extract abstractions from sensory experience. I illustrate the utility of this interdisciplinary connection by showing how it can benefit both philosophy and computer science. Computer scientists can continue to mine the history of philosophy for ideas and aspirational targets to hit on the way to more robustly rational artificial agents. Philosophers, in turn, can see how some of the historical empiricists’ most ambitious speculations can be realized in specific computational systems.

Bio: Cameron Buckner is an Associate Professor in the Department of Philosophy at the University of Houston. His research primarily concerns philosophical issues that arise in the study of non-human minds, especially in the fields of animal cognition and artificial intelligence. He began his academic career in logic-based artificial intelligence. This research inspired an interest in the relationship between classical models of reasoning and the (usually very different) ways that humans and animals actually solve problems, which led him to the discipline of philosophy. He received a PhD in Philosophy from Indiana University in 2011 and held an Alexander von Humboldt Postdoctoral Fellowship at Ruhr-University Bochum from 2011 to 2013. Recent representative publications include “Empiricism without Magic: Transformational Abstraction in Deep Convolutional Neural Networks” (2018, Synthese) and “Rational Inference: The Lowest Bounds” (2017, Philosophy and Phenomenological Research). The latter won the American Philosophical Association’s Article Prize for the period 2016–2018. He has just finished a National Science Foundation grant to complete a book that uses empiricist philosophy of mind (drawing on figures such as Aristotle, Ibn Sina, John Locke, David Hume, William James, and Sophie de Grouchy) to understand recent advances in deep-neural-network-based artificial intelligence. The book will be published by Oxford University Press in November 2023.

Book page link: https://global.oup.com/academic/product/from-deep-learning-to-rational-machines-9780197653302